👨💻 Xmas Computer Crash & Code Refactoring

I had a very busy holiday season, dealing with a nasty computer crash and some forced code refactoring.

Christmas Night Crash

On December 25th at 23:15, my AMD Ryzen Beelink Mini PC decided to call it a day, permanently.

Inside was an NVMe SSD holding a full year of projects. I was abroad at the time, which added to the challenge.

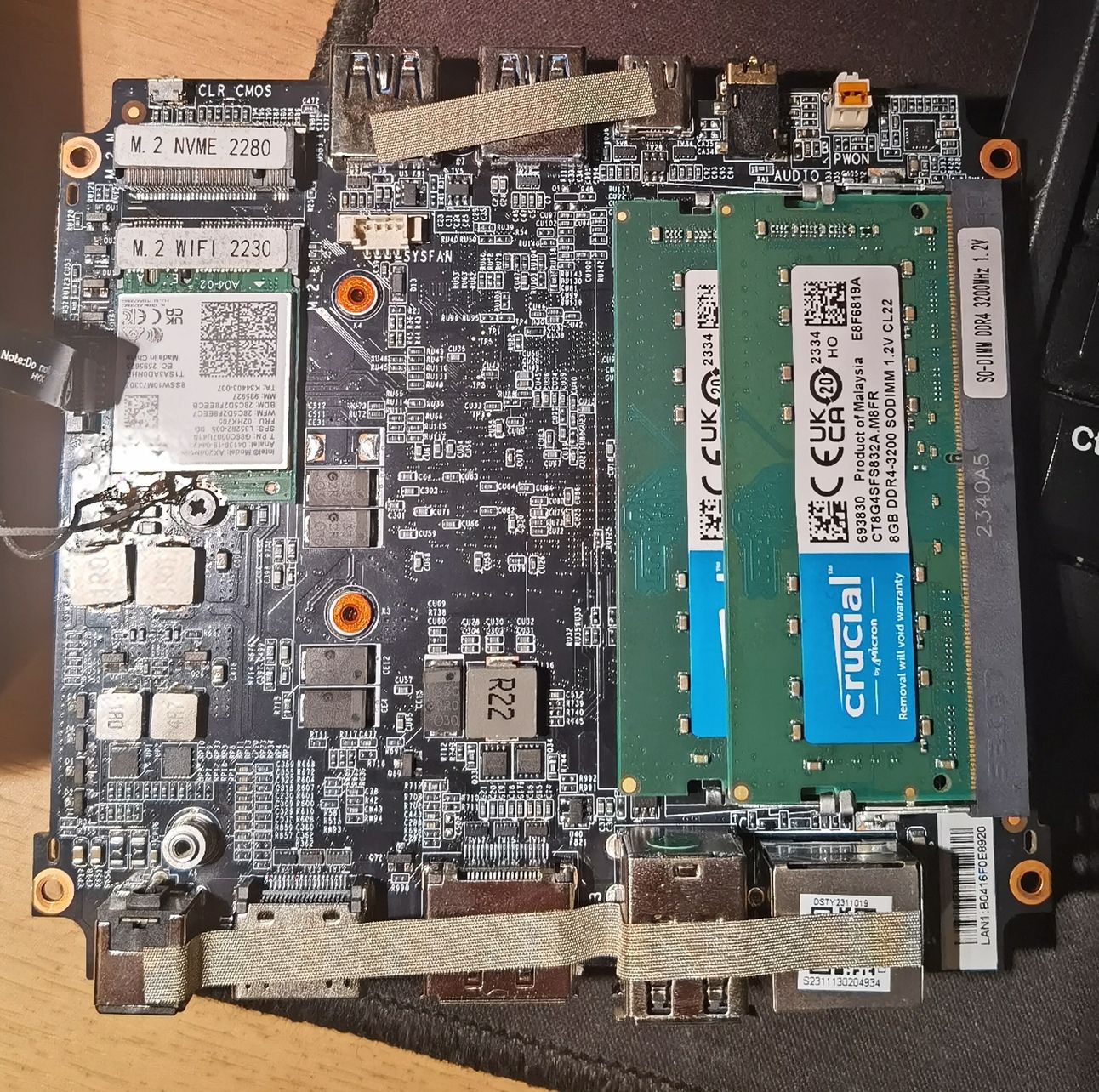

inside the Beelink, after removing the SSD

Extracting the Essentials

First, I had to remove the SSD from the Beelink.

One year of projects

While most of my code was safely backed up on GitHub, there were some recent local files I hadn’t yet pushed to the cloud.

These local .py files were critical, and retrieving them became my top priority.

Next, I tracked down a compatible external NVMe SSD enclosure.

If you ever need an SSD enclosure, make sure to verify whether your drive is a legacy SATA SSD or a more recent NVMe SSD, as the enclosures for these two types are not interchangeable.

Once I had it, I could safely transfer my scripts…
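The post doesn't say how the files were copied off the drive. As a rough sketch of one way to do it, assuming the SSD is mounted as an ordinary folder, the script below copies only recently modified .py files and skips bulky directories. The function name, the skip list, and the cutoff date are all hypothetical.

```python
import shutil
from datetime import datetime
from pathlib import Path

# Directories that never hold hand-written work (an assumption; adjust to taste).
SKIP_DIRS = {"venv", ".venv", "node_modules", "__pycache__", ".git"}


def rescue_recent_scripts(src_root: Path, dest_root: Path, modified_after: datetime) -> list[Path]:
    """Copy .py files changed after `modified_after`, preserving the folder layout."""
    cutoff = modified_after.timestamp()
    copied = []
    for src in sorted(src_root.rglob("*.py")):
        rel = src.relative_to(src_root)
        if SKIP_DIRS.intersection(rel.parts):
            continue  # inside a virtualenv, cache, or similar
        if src.stat().st_mtime < cutoff:
            continue  # older than the cutoff, presumably already pushed
        dest = dest_root / rel
        dest.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(src, dest)  # copy2 keeps the original timestamps
        copied.append(rel)
    return copied
```

Filtering on modification time is only a heuristic. Running `git status` in each recovered repository is the more reliable check for work that was never pushed.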

Good to Know: Picking the Right Enclosure

M.2 Form Factor

M.2 is a physical form factor, not a protocol or technology. It defines the physical size and connection interface of the SSD.

M.2 drives can use either the SATA protocol or the NVMe protocol, depending on the drive.

M.2 SATA SSDs

M.2 SATA SSDs use the SATA protocol for data transfer, which means they are limited to SATA III speeds (~600 MB/s).

Internally, these drives connect to the motherboard's SATA controller, even though they plug into an M.2 slot.

They are typically labeled with terms like "M.2 SATA" to differentiate them from NVMe drives.

An M.2 SATA SSD will not work in an M.2 slot that only supports NVMe.

M.2 NVMe SSDs

M.2 NVMe SSDs use the NVMe protocol over PCIe lanes for significantly faster speeds (up to several GB/s).

They require an M.2 slot that supports NVMe and PCIe connectivity.
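To make the two rules above concrete, here is a small illustrative sketch. It derives the ~600 MB/s SATA III ceiling from the 6 Gbit/s line rate and its 8b/10b encoding, and it models the "protocols must match" rule for enclosures. The names `Protocol`, `sata3_ceiling_mb_per_s`, and `enclosure_fits` are made up for this example.

```python
from enum import Enum


class Protocol(Enum):
    SATA = "SATA"
    NVME = "NVMe"


def sata3_ceiling_mb_per_s() -> int:
    """Theoretical SATA III payload rate in MB/s."""
    line_rate_bits = 6_000_000_000           # SATA III signals at 6 Gbit/s
    payload_bits = line_rate_bits * 8 // 10  # 8b/10b: 8 data bits per 10 line bits
    return payload_bits // 8 // 1_000_000    # bits -> bytes -> megabytes


def enclosure_fits(drive: Protocol, enclosure_supports: set) -> bool:
    """An enclosure works only if it speaks the drive's protocol."""
    return drive in enclosure_supports


print(sata3_ceiling_mb_per_s())                        # 600
print(enclosure_fits(Protocol.NVME, {Protocol.SATA}))  # False
```

Some enclosures support both protocols, which is why the second argument is a set rather than a single value.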

Upgrading to the Mac Mini M4

Back in London, it was clear I needed a replacement. Enter the Mac Mini M4.

The Mac Mini M4 offers incredible value at £475, featuring a 10-core CPU and 10-core GPU. It's remarkable how much computing power Apple has managed to pack into such a compact device.

🙏 On a side note, Amazon agreed to refund 100% of the Beelink purchase price (I was lucky—only 4 days left before the end of the 12-month warranty).

I formatted the SSD, reinstalled it in the mini PC, and returned the device to Amazon.

But switching systems meant setting up my development environment from scratch:

Installing Homebrew

    /bin/bash -c "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/HEAD/install.sh)"

Installing ffmpeg via Homebrew

    brew install ffmpeg

Installing node.js to use npm

    brew install node

Installing all Python packages from my requirements.txt files after setting up a virtual environment for each project (I’ve used Python 3.10.3 for most projects).

    python3.10 -m venv venv
    source venv/bin/activate
    pip install -r requirements.txt

It was tedious but necessary to get back to work quickly.
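Repeating the virtual-environment steps for every project is the part that lends itself to scripting. The sketch below is one possible approach, not what was actually used here. It assumes each project is a direct subfolder of a single root, keeps its dependencies in requirements.txt, and runs on macOS or Linux, where the environment's pip lives in venv/bin. The function names are hypothetical.

```python
import subprocess
from pathlib import Path


def venv_commands(project_dir: Path, python: str = "python3.10") -> list[list[str]]:
    """Return the commands that recreate one project's virtual environment."""
    venv_dir = project_dir / "venv"
    return [
        [python, "-m", "venv", str(venv_dir)],
        # Calling the venv's own pip avoids having to `source venv/bin/activate`.
        [str(venv_dir / "bin" / "pip"), "install", "-r",
         str(project_dir / "requirements.txt")],
    ]


def rebuild_all(projects_root: Path, dry_run: bool = True) -> list[list[str]]:
    """Plan (and optionally run) the rebuild for every project with a requirements.txt."""
    planned = []
    for req in sorted(projects_root.glob("*/requirements.txt")):
        for cmd in venv_commands(req.parent):
            planned.append(cmd)
            if not dry_run:
                subprocess.run(cmd, check=True)  # stop at the first failure
    return planned
```

With `dry_run=True` (the default) nothing is executed, so the planned commands can be reviewed before anything is installed.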

Refactoring the AI Jingle Maker

Adding to the complexity, two days before the Christmas Crash, my deployment platform, Railway, made a “small” infrastructure change that forced a refactor of the AI Jingle Maker.

My web app and MySQL database, which had previously operated in the same region, were now in two different regions, even though they remained connected via Railway’s private network.

This introduced a slight lag that required more efficient handling of database queries. To mitigate this, I implemented MySQL DB Connection Pooling and improved the efficiency of my SQL queries.

    import os
    from contextlib import contextmanager
    from threading import Lock
    from uuid import uuid4

    import mysql.connector
    from dotenv import load_dotenv
    from flask import g
    from mysql.connector import Error, pooling

    # Load the MYSQL* variables from a local .env file (a no-op if none exists).
    load_dotenv()

    POOL_CONFIG = {
        'pool_name': 'mypool',
        'pool_size': 32,  # the largest pool mysql-connector-python allows
        'pool_reset_session': True,  # reset session state when a connection is returned
        'host': os.getenv('MYSQLHOST'),
        'user': 'root',
        'password': os.getenv('MYSQLPASSWORD'),
        'database': os.getenv('MYSQLDATABASE'),
        'port': int(os.getenv('MYSQLPORT', 3306)),
        'connect_timeout': 3,
        'autocommit': True
    }

    # Created once at import time and shared by every request.
    connection_pool = mysql.connector.pooling.MySQLConnectionPool(**POOL_CONFIG)

    # IDs of connections currently checked out, guarded by connection_lock.
    active_connection_ids = set()
    connection_lock = Lock()

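    @contextmanager
    def get_db_connection():
        """Borrow a connection from the pool and always hand it back."""
        conn = None
        conn_id = str(uuid4())
        try:
            with connection_lock:
                conn = connection_pool.get_connection()
                active_connection_ids.add(conn_id)
            # Yield outside the lock so other threads are not blocked while
            # the caller runs its queries.
            yield conn
        finally:
            if conn:
                try:
                    with connection_lock:
                        active_connection_ids.discard(conn_id)
                    # close() on a pooled connection returns it to the pool.
                    conn.close()
                except Error:
                    pass  # a failed return must not mask the caller's exception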

    def init_app(app):
        # close_db is idempotent (it pops from g), so registering it on both
        # hooks is safe; teardown_appcontext alone would also cover requests.
        app.teardown_appcontext(close_db)
        app.teardown_request(close_db)

    def get_db():
        """Return this request's connection, borrowing one on first use."""
        if 'db' not in g:
            conn_id = str(uuid4())
            with connection_lock:
                conn = connection_pool.get_connection()
                active_connection_ids.add(conn_id)
            g.db = conn
            g.db_id = conn_id
            g.db_used = True
        return g.db

    def close_db(e=None):
        """Return the request's connection, if any, to the pool."""
        db = g.pop('db', None)
        conn_id = g.pop('db_id', None)
        db_used = g.pop('db_used', False)
        if db is None or not db_used or conn_id not in active_connection_ids:
            return
        try:
            if db.is_connected():
                db.reset_session()
            # Close even if the link dropped: for a pooled connection,
            # close() is what returns it to the pool.
            db.close()
        except Exception:
            pass  # teardown handlers should not raise
        finally:
            with connection_lock:
                active_connection_ids.discard(conn_id)

Example of a connection:

    with get_db_connection() as db_conn:
        cursor = db_conn.cursor()

Why should you implement connection pooling?

Without pooling, every interaction between your Flask application and the database means opening and then closing a connection. While this may seem trivial for a single request, the cumulative cost becomes significant under high traffic.

Opening a connection involves a TCP handshake, credential authentication, and session setup. Each step adds latency, and more so when the app and the database sit in different regions.

Connection pooling avoids repeating these steps by keeping a set of open connections ready for reuse, so each request pays only for its own queries.

Here’s a quick comparison:

Without Connection Pooling:

Create a new connection → Execute Query → Close the connection (Repeat for every query).

With Connection Pooling:

Reuse an existing connection from the pool → Execute Query → Return the connection to the pool.

By avoiding the overhead of constant connection creation and teardown, you save valuable resources and ensure consistent performance.
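The comparison above can be seen in a toy model that needs no database. This is only an illustrative sketch: `FakeConnection` and `TinyPool` are invented for the example and are not how mysql-connector-python implements its pool. The point is simply to count how many connections get opened in each case.

```python
import queue
from contextlib import contextmanager


class FakeConnection:
    """Stand-in for a real DB connection; counts how many were ever opened."""
    opened = 0

    def __init__(self):
        FakeConnection.opened += 1  # a real driver would pay the setup cost here

    def execute(self, sql):
        return f"ran: {sql}"

    def close(self):
        pass


class TinyPool:
    """Fixed-size pool: connections are opened once, then borrowed and returned."""

    def __init__(self, size):
        self._idle = queue.Queue(maxsize=size)
        for _ in range(size):
            self._idle.put(FakeConnection())

    @contextmanager
    def connection(self, timeout=None):
        conn = self._idle.get(timeout=timeout)  # blocks while every connection is busy
        try:
            yield conn
        finally:
            self._idle.put(conn)  # hand it back instead of closing it


def run_without_pool(n_queries):
    """Open and close a connection per query; return how many were opened."""
    before = FakeConnection.opened
    for i in range(n_queries):
        conn = FakeConnection()
        conn.execute(f"SELECT {i}")
        conn.close()
    return FakeConnection.opened - before


def run_with_pool(n_queries, pool_size=2):
    """Run the same queries through a pool; return how many were opened."""
    before = FakeConnection.opened
    pool = TinyPool(pool_size)
    for i in range(n_queries):
        with pool.connection() as conn:
            conn.execute(f"SELECT {i}")
    return FakeConnection.opened - before


print(run_without_pool(100))  # 100
print(run_with_pool(100))     # 2
```

A hundred queries cost a hundred connection setups without the pool and only two with it. That gap is where the saved latency comes from.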

While the changes were unplanned, they significantly optimized the codebase and prepared it for future scalability.
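The post doesn't show which queries were tightened. One common way to cut cross-region round trips, offered here purely as an assumption about what "more efficient" can look like, is to fetch many rows with a single parameterized query instead of one query per row. The `jingles` table and its columns are hypothetical.

```python
def batched_select(ids):
    """Build one parameterized query that fetches many rows in a single round trip."""
    ids = list(ids)
    if not ids:
        raise ValueError("ids must not be empty")  # `IN ()` is not valid SQL
    # One %s placeholder per value; the driver escapes the values itself.
    placeholders = ", ".join(["%s"] * len(ids))
    sql = f"SELECT id, title FROM jingles WHERE id IN ({placeholders})"
    return sql, tuple(ids)


sql, params = batched_select([3, 5, 8])
print(sql)     # SELECT id, title FROM jingles WHERE id IN (%s, %s, %s)
print(params)  # (3, 5, 8)
```

The pair would then be passed to `cursor.execute(sql, params)`, so three lookups cost one network round trip rather than three.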

Lessons Learned

This Christmas Night crash reinforced the importance of backups and having contingency plans for your hardware and workflow.

Holding the SSD was a stark reminder of how much we rely on (tiny pieces of) technology to store our work and creativity.

Even with most scripts backed up, the local files I recovered were a crucial part of keeping everything intact.

I also learned a lot from the forced refactoring episode, thanks to the kind assistance of Brody from Railway’s Support Team, who generously granted me some free credit for the hassle.

It’s been a hectic holiday season, but I’m starting the new year with an upgraded setup, a refactored project, and a better appreciation for robust systems.

Here’s to a smoother year ahead!

Happy New Coding Year! 🥂

PS: Next time, I’ll share the results of my first experiments with Claude AI, which I’ve just added to my toolbox.